Search engines automatically assign a crawl rate to each website in their index. This rate depends on factors such as how often the webmaster updates the site and how popular its pages are. Although we cannot control the indexing pattern of search engine spiders, there is a way to change the crawl speed, at least for Google's spiders. This tutorial explains one method to change the current crawl rate if you feel that Google's spiders crawl your website too slowly. To assign a custom crawl speed for Google's spiders, you must have a verified Webmaster Tools account.
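Before changing anything, it can help to check how often Googlebot actually visits. The following is only a minimal sketch, assuming your server writes access logs in the common "combined" format and that matching the text "Googlebot" in a log line is good enough for a rough count (user agents can be faked). The function name `googlebot_hits_per_day` and the sample log lines are made up for illustration.

```python
import re
from collections import Counter

# Matches the date part of a combined-log timestamp, e.g. [10/Oct/2023:13:55:36 +0000]
DATE_RE = re.compile(r"\[(\d{2}/[A-Za-z]{3}/\d{4}):")


def googlebot_hits_per_day(lines):
    """Count log lines per day that mention Googlebot (hypothetical helper)."""
    hits = Counter()
    for line in lines:
        # Rough filter: relies on the user-agent string, which can be spoofed.
        if "Googlebot" not in line:
            continue
        match = DATE_RE.search(line)
        if match:
            hits[match.group(1)] += 1
    return hits


# Invented sample lines in combined log format, for illustration only.
GOOGLEBOT_UA = '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
sample = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 2326 "-" ' + GOOGLEBOT_UA,
    '66.249.66.1 - - [10/Oct/2023:18:02:10 +0000] "GET /about HTTP/1.1" 200 1200 "-" ' + GOOGLEBOT_UA,
    '66.249.66.1 - - [11/Oct/2023:07:15:00 +0000] "GET / HTTP/1.1" 200 2326 "-" ' + GOOGLEBOT_UA,
    '203.0.113.5 - - [11/Oct/2023:09:00:00 +0000] "GET / HTTP/1.1" 200 2326 "-" "Mozilla/5.0"',
]

for day, count in sorted(googlebot_hits_per_day(sample).items()):
    print(day, count)
```

If the daily counts look low compared with how often you publish, that is a hint that a faster crawl setting may be worth trying.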

Steps to Manually Change the Google Crawl Rate of a Website

The first step is to verify, in your Webmaster Tools account, the domain whose crawl rate you want to change. To set a custom crawl rate for your domain, follow the steps below.

- Log in to your Google Webmaster Tools account at http://www.google.com/webmastertools

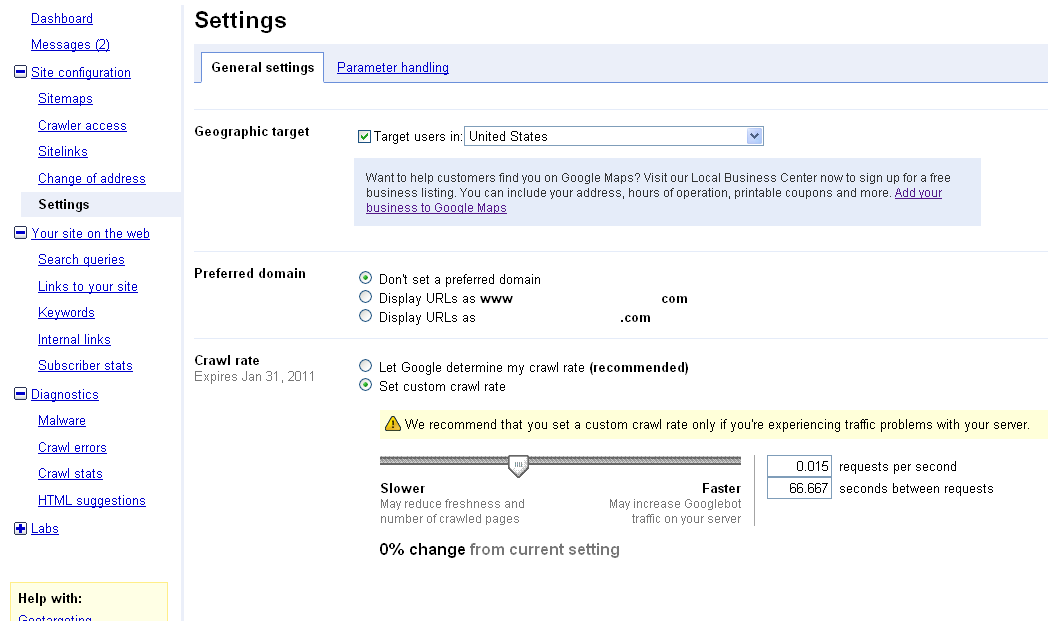

- Click Settings (on the left-hand side of the page)

- Select "Set custom crawl rate"

Use the slider to change the automatic speed. If you move the slider to the left, spiders will visit your website less frequently. If you want them to visit your pages more often, move the slider to the right. Do not forget to save your changes.

A new custom crawl rate is valid for 90 days only. Please note that if Google sets a default crawl speed for your website, you cannot change it yourself. For a domain with a default speed assigned by Google, you will not see the slider for altering the current crawl speed. So this tutorial works only for those lucky webmasters whose domains still allow the crawl speed to be changed. If yours is one of them, act quickly, before Google assigns an automatic value.
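Because the custom setting lasts 90 days, it is handy to note when you will need to set it again. This is a small sketch of the date arithmetic, assuming the 90 days are counted from the day you save the setting; whether Google counts the first day is not something this tutorial can confirm. The function name `custom_rate_expiry` is made up for illustration.

```python
from datetime import date, timedelta

# Validity period of a custom crawl rate, as stated in this tutorial.
CUSTOM_RATE_VALID_DAYS = 90


def custom_rate_expiry(set_on):
    """Return the date 90 days after the setting was saved (hypothetical helper)."""
    return set_on + timedelta(days=CUSTOM_RATE_VALID_DAYS)


# Example: a setting saved on 1 January 2024.
print(custom_rate_expiry(date(2024, 1, 1)))  # 2024-03-31
```

Put that date in your calendar so you can revisit the setting before it lapses.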

If you move the slider toward the slower side, Google's robots will visit your site less often, and there is a risk that they will miss your recent updates. That is fine for a website whose content rarely changes, such as a static website, but for a dynamic web portal I recommend moving the slider toward the faster side. Although the robots will then visit your site very often and consume server bandwidth, they will not miss any recent updates.
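To see how much bandwidth the robots really consume, you can add up the response sizes of their requests in your access log. This is a rough sketch, assuming the combined log format (status code and byte count right after the quoted request) and a simple "Googlebot" text match; the function name `googlebot_bytes` and the sample lines are invented for illustration.

```python
import re

# Captures the status code and response size that follow the quoted request
# in a combined-format log line: ... "GET / HTTP/1.1" 200 2326 ...
SIZE_RE = re.compile(r'" (\d{3}) (\d+|-) ')


def googlebot_bytes(lines):
    """Sum the response bytes of log lines that mention Googlebot (hypothetical helper)."""
    total = 0
    for line in lines:
        if "Googlebot" not in line:
            continue
        match = SIZE_RE.search(line)
        # A size of "-" means no body was sent, so there is nothing to add.
        if match and match.group(2) != "-":
            total += int(match.group(2))
    return total


# Invented sample lines, for illustration only.
bot_ua = '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
log_lines = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 2326 "-" ' + bot_ua,
    '66.249.66.1 - - [10/Oct/2023:14:00:00 +0000] "GET /feed HTTP/1.1" 304 - "-" ' + bot_ua,
    '203.0.113.5 - - [10/Oct/2023:14:05:00 +0000] "GET / HTTP/1.1" 200 5000 "-" "Mozilla/5.0"',
]

print(googlebot_bytes(log_lines), "bytes served to Googlebot")
```

Comparing this total before and after moving the slider gives a rough idea of what the faster setting costs you.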

Thanks for sharing. Search engine optimization is indeed one of the most crucial areas in Internet marketing; it is a perfect bridge between technology and business.

I still have a question: is it good practice to increase it manually or not?

If you feel the Google spider crawl rate on your site is very low, it is better to increase it.